The downsides of experimentation | Experimental Mind #191

Your overview of interesting reads, events and jobs for the experimental mind.

Hi, enjoy this edition full of interesting reads you might have missed, job opportunities, upcoming events, a quote to think about and something that made me smile.

➡️ 8 Top Google Optimize Alternatives with Pros, Cons, & Real Reviews

With fewer than two weeks until Google Optimize is sunset, you should be ready. If you are not, this article is for you: it outlines the top contenders on the market and guides you toward the best decision for your optimization needs.

🔎 Interesting reads you might have missed

The downsides of experimentation

Stash Sajin: “I’m all for leaning into the scientific method to make products better. But we owe it to ourselves — and our users — to remember that experimentation isn’t the be-all and end-all. It’s a tool in a broader toolkit, and like any tool, its utility is defined by the skill and wisdom with which it’s used.”

How to run many tests at once: interaction avoidance & detection

On the latest TLC Call, Lukas Vermeer talked about interaction avoidance and detection: “People being worried about interaction effects is a problem to worry about”.
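One simple way to check whether two concurrent tests interact is to compare the effect of test A among users in B's control arm with its effect among users in B's treatment arm (a difference of differences). The Python sketch that follows is a minimal illustration under stated assumptions: binary conversions, independent users, every user in exactly one of the four A×B cells, and a normal approximation. The function name `interaction_z_test` and the cell layout are hypothetical, and this is not necessarily the approach discussed on the call.

```python
from math import sqrt
from statistics import NormalDist


def interaction_z_test(cells):
    """Difference-of-differences z-test for an A x B interaction (hypothetical helper).

    cells maps (a_arm, b_arm) -> (conversions, users), with 0 = control and
    1 = treatment. All four cells (0,0), (1,0), (0,1), (1,1) must be present.
    Assumes independent users and a normal approximation for each rate.
    """
    rates = {}
    variance = 0.0
    for key, (conversions, users) in cells.items():
        p = conversions / users
        rates[key] = p
        # Variance of a single conversion-rate estimate; the four cells are independent.
        variance += p * (1 - p) / users

    # Effect of A when B is in control vs when B is in treatment.
    effect_in_b_control = rates[(1, 0)] - rates[(0, 0)]
    effect_in_b_treatment = rates[(1, 1)] - rates[(0, 1)]
    interaction = effect_in_b_treatment - effect_in_b_control

    z = interaction / sqrt(variance)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return interaction, z, p_value


# Hypothetical counts: A lifts conversion by 0.5 pp in both arms of B.
cells = {(0, 0): (500, 10000), (1, 0): (550, 10000),
         (0, 1): (510, 10000), (1, 1): (560, 10000)}
print(interaction_z_test(cells))
```

A small p-value flags an interaction worth investigating; a large one is consistent with the tests not interacting, though it cannot prove the absence of an interaction.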

Statistical significance on a shoestring budget

Alexey Komissarouk: “Many startups experience a chicken-and-egg problem with growth: they want to run experiments to gain more volume, but lack the volume for experiments to be practical. … without the precision A/B testing, it’s that much harder to know which of your efforts are actually working, and which are neutral-to-harmful”

What is the difference between A/B testing and incrementality testing?

Trevor Testwuide from Measured: “Not all incrementality tests are A/B tests, and not all A/B tests are incrementality tests. While both methodologies involve splitting sample audiences (or geographic locations) and testing different conditions, their objectives differ.”

Setting A/B test duration in a Bayesian context

Xavier Gumara Rigol from Oda: “We’ve noticed there’s a lack of information on how to decide on A/B test duration within the Bayesian framework. With that in mind, we aim to bridge that gap in this article by presenting the principles we have agreed upon at Oda.”
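For readers new to the Bayesian framing: two quantities commonly monitored during a Bayesian A/B test are the probability that B beats A and the expected loss of shipping B, and some teams stop a test once the expected loss falls below a chosen threshold. The Python sketch that follows estimates both by Monte Carlo under assumed uniform Beta(1, 1) priors and binary conversions. The function name `prob_b_beats_a` is hypothetical, and this is a generic illustration, not the set of principles Oda describes in the article.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=100_000, seed=42):
    """Monte Carlo estimate of P(rate_B > rate_A) and expected loss of choosing B.

    Hypothetical helper: assumes Beta(1, 1) priors, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)  # seeded for reproducible estimates
    wins = 0
    loss_sum = 0.0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
        # Loss of shipping B: how much conversion we give up if A is actually better.
        loss_sum += max(rate_a - rate_b, 0.0)
    return wins / draws, loss_sum / draws


# Hypothetical data: 100/1000 conversions in A, 120/1000 in B.
print(prob_b_beats_a(100, 1000, 120, 1000))
```

The expected-loss criterion is often preferred to a fixed probability threshold because it accounts for how much you stand to lose, not just how likely you are to be wrong.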

[Paper] All about sample-size calculations for A/B testing

“This paper addresses this fundamental gap by developing new sample size calculation methods for correlated data, as well as absolute vs. relative treatment effects, both ubiquitous in online experiments.”
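As a baseline for the paper's methods, here is the textbook per-arm sample size for comparing two conversion rates with a two-sided z-test, showing how an absolute and a relative minimum detectable effect (MDE) differ: a 10% relative lift on a 5% baseline is an absolute lift of 0.5 percentage points. This Python sketch assumes independent users, equal-sized arms, and a normal approximation; it does not handle the correlated data the paper addresses, and the function name `sample_size_per_arm` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline, mde, relative=False, alpha=0.05, power=0.8):
    """Textbook two-proportion sample size per arm (hypothetical helper).

    mde is an absolute difference in rates, or a fraction of the baseline
    when relative=True. Assumes independent users and equal arm sizes.
    """
    delta = baseline * mde if relative else mde
    treated = baseline + delta
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + treated * (1 - treated)
    return ceil((z_alpha + z_beta) ** 2 * variance / delta ** 2)


# The same effect expressed two ways gives (essentially) the same answer.
print(sample_size_per_arm(0.05, 0.10, relative=True))
print(sample_size_per_arm(0.05, 0.005))
```

Because the required sample grows with 1/MDE², halving the detectable effect roughly quadruples the traffic needed, which is the volume problem low-traffic startups run into.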

🚀 Job opportunities

Looking for a new challenge in experimentation? Find 100+ experimentation-related jobs on ExperimentationJobs.com. These jobs are from all over the world: on-site, fully remote or hybrid. Take a look and start pivoting your career.

This week's featured roles:

Functional Engineer Advanced Analytics at AkzoNobel (Sassenheim, Netherlands)

Product Growth Analyst at Meta (Multiple locations, USA)

Senior CRO Analyst at ClickValue (Amsterdam, Netherlands)

Experimentation Specialist at FINN (Oslo, Norway)

Conversion Rate Optimization Manager at IDFC First Bank (Mumbai, India)

Are you hiring? Submit here

📅 Upcoming events

18-22 Sep: Experiment Nation Conference (virtual)

19-21 Sep: Nonprofit Innovation & Optimization Summit (Dallas, USA)

21-22 Sep: Advances with Field Experiments (Chicago, USA)

🆕 16-17 Oct: CXL Live (Austin, USA)

🆕 17 Oct: GrowthHackers Conference (virtual)

🆕 26 Oct: Conversion Jam (Oslo/Stockholm)

See all experimentation-related conferences & events in 2023.

💬 Quote to think about

“Everyone's running experiments, but only some of them have control groups and randomization.” — Sean Taylor

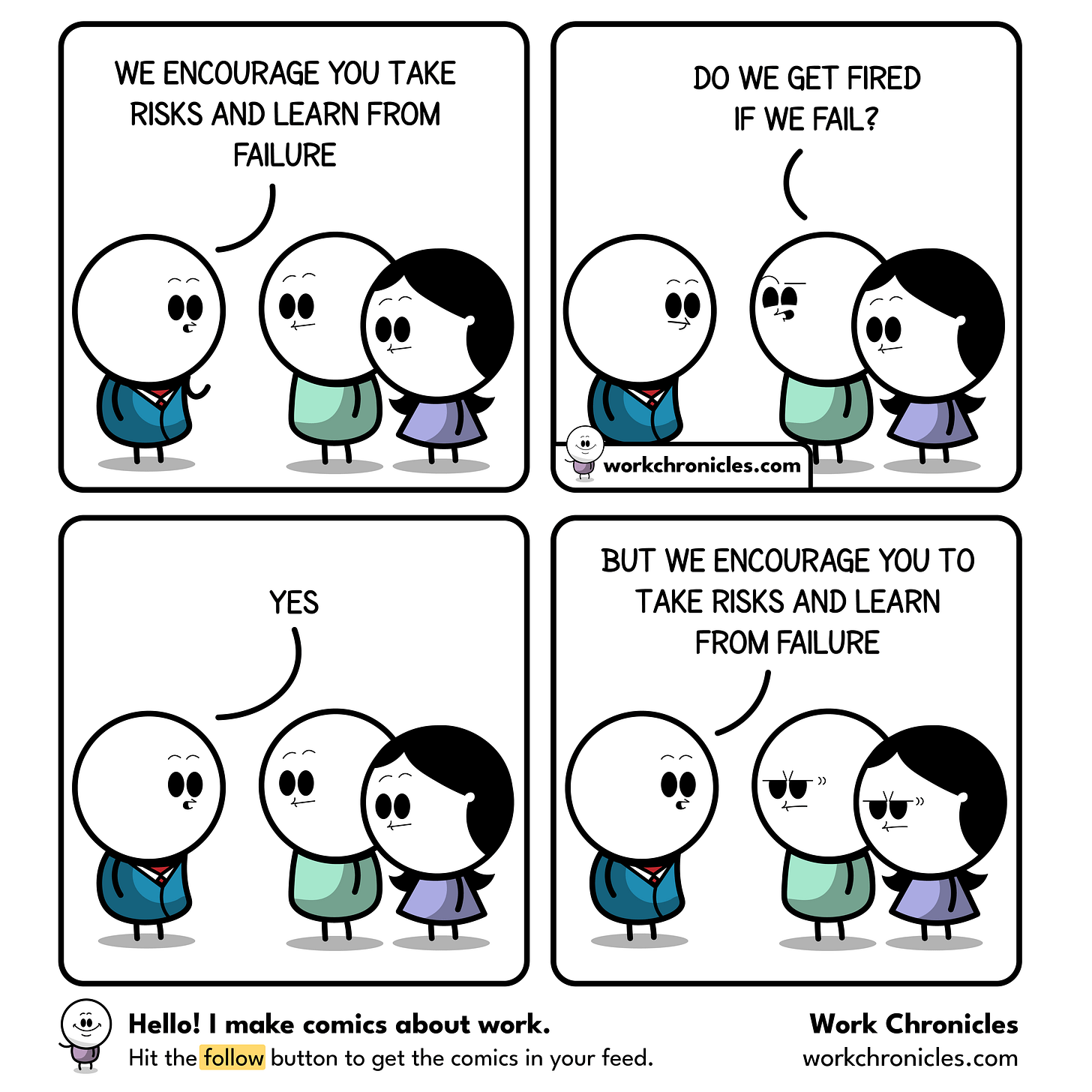

😅 This made me smile

Please take risks and learn from failure … but you can’t fail! From Work Chronicles.

👍 Thanks for reading

If you are enjoying the Experimental Mind newsletter, please share this email with a friend or colleague. Looking for inspiration on how best to do that? Take a look at what others are saying. Have a great week — and keep experimenting.